Docker Swarm is a powerful platform, but it can be enhanced by taking advantage of open source NGINX, and even more by using NGINX Plus. NGINX Plus can use DNS to dynamically reconfigure the set of backend containers it load balances, and its Status API provides visibility into that traffic. Together they make for a very powerful container solution. One way to achieve this is to use NGINX as a reverse proxy on one or more public-facing nodes. We’re going to see how to create two service containers that are replicated across several nodes. These services will be simple Apache and NGINX web applications.
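The DNS-based reconfiguration idea can be illustrated with a minimal Python sketch. This is not how NGINX Plus is implemented internally. It is a round-robin pool that re-resolves a service name and rebuilds its backend list when the answer changes. The class name `DnsBackedPool`, the service name `service1` and the addresses are hypothetical. The resolver is passed in as a plain function, so the example runs without a real DNS server.

```python
import itertools
from typing import Callable, Iterable


class DnsBackedPool:
    """Round-robin pool whose members come from a DNS-style lookup.

    `resolve` is any callable returning the current addresses for a name.
    In a real deployment it would query DNS; here it is injected so the
    sketch runs without a DNS server.
    """

    def __init__(self, name: str, resolve: Callable[[str], Iterable[str]]):
        self.name = name
        self._resolve = resolve
        self._backends: list[str] = []
        self._cycle = iter(())
        self.refresh()

    def refresh(self) -> None:
        # Re-resolve the name; rebuild the rotation only if the set changed.
        current = sorted(set(self._resolve(self.name)))
        if current != self._backends:
            self._backends = current
            self._cycle = itertools.cycle(current)

    @property
    def backends(self) -> list[str]:
        return list(self._backends)

    def pick(self) -> str:
        # Hand out the next backend in round-robin order.
        if not self._backends:
            raise RuntimeError(f"no backends resolved for {self.name!r}")
        return next(self._cycle)


# Hypothetical DNS answers for a service named "service1".
records = {"service1": ["10.0.0.5", "10.0.0.6"]}
pool = DnsBackedPool("service1", lambda name: records[name])
print([pool.pick() for _ in range(3)])  # ['10.0.0.5', '10.0.0.6', '10.0.0.5']

records["service1"].append("10.0.0.7")  # a replica was added
pool.refresh()
print(pool.backends)  # ['10.0.0.5', '10.0.0.6', '10.0.0.7']
```

The point of the design is that the balancer never needs to be told where containers are. It only re-asks DNS, so scaling a service up or down changes the rotation on the next refresh.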

All the environment variables were configured automatically when you set up the Docker client to access the Swarm earlier. OK, we are almost done: now it is time to start the web services. The Swarm load balancer distributes requests across the replicas of the same simple web app backend. For example, you can run an nginx web server service on every swarm node.

If you run nginx as a service using the routing mesh, connecting to the nginx port on any swarm node shows you the web page served by (effectively) a random swarm node running the service. Networking is namespaced in Docker, and part of that namespace is the loopback device, aka 127. NGINX is the most widely deployed Kubernetes Ingress controller. Here are the commands I have used for this.
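The "effectively random node" behaviour of the routing mesh can be pictured with a toy Python model. Every node accepts the request on the published port, even a node that runs no task for the service, and the request is then forwarded to some task wherever it runs. The node and task names are hypothetical. Round-robin stands in for whatever selection the real mesh performs, so treat this as a mental model, not Docker's implementation.

```python
import itertools


class RoutingMesh:
    """Toy model of an ingress routing mesh (simplified).

    Every node accepts traffic on the published port, including nodes
    that run no task for the service; the request is forwarded to one of
    the service's tasks wherever it runs. Round-robin stands in for the
    real mesh's selection logic.
    """

    def __init__(self, nodes: list[str], tasks: dict[str, str]):
        self.nodes = set(nodes)
        self.tasks = dict(tasks)  # task name -> node the task runs on
        self._rotation = itertools.cycle(sorted(self.tasks))

    def handle(self, entry_node: str) -> tuple[str, str]:
        """Return (task, node running it) for a request entering at entry_node."""
        if entry_node not in self.nodes:
            raise ValueError(f"unknown node {entry_node!r}")
        if not self.tasks:
            raise RuntimeError("service has no running tasks")
        task = next(self._rotation)
        return task, self.tasks[task]


mesh = RoutingMesh(
    nodes=["node1", "node2", "node3"],
    tasks={"web.1": "node1", "web.2": "node2"},
)
# node3 runs no task for the service, yet it still answers on the port.
for _ in range(3):
    print(mesh.handle("node3"))
# ('web.1', 'node1')
# ('web.2', 'node2')
# ('web.1', 'node1')
```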

Setting up the load balancer

Using a load balancer outside of the Swarm is an easy way to connect to your containers without having to worry about the cluster nodes. The topology is similar to the one in the NGINX blog - nginx. My question is whether it is even possible to get a swarm to work with websockets. In order to access the appx cluster, you need to map the appx.
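One way to read "without having to worry about the cluster nodes" is that an external balancer only needs the node addresses. This assumes the service is published through the routing mesh, so any healthy node can answer. Below is a minimal Python sketch of that idea: round-robin over node addresses, skipping nodes marked as down. The class, its methods and the `192.0.2.x` documentation addresses are hypothetical and only illustrate the pattern.

```python
class ExternalBalancer:
    """Round-robin over swarm node addresses, skipping nodes marked down."""

    def __init__(self, node_addrs: list[str]):
        self.node_addrs = list(node_addrs)
        self.down: set[str] = set()
        self._next = 0  # index of the next node to try

    def mark_down(self, addr: str) -> None:
        self.down.add(addr)

    def mark_up(self, addr: str) -> None:
        self.down.discard(addr)

    def pick(self) -> str:
        # Try each node at most once per call, in rotation order.
        n = len(self.node_addrs)
        for _ in range(n):
            addr = self.node_addrs[self._next % n]
            self._next += 1
            if addr not in self.down:
                return addr
        raise RuntimeError("no healthy swarm nodes")


lb = ExternalBalancer(["192.0.2.10:80", "192.0.2.11:80", "192.0.2.12:80"])
lb.mark_down("192.0.2.11:80")
print([lb.pick() for _ in range(4)])
# ['192.0.2.10:80', '192.0.2.12:80', '192.0.2.10:80', '192.0.2.12:80']
```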

Open the Windows hosts file and add these entries: 127. You may add the nginx container to multiple application-specific networks, or you may create one proxy network and attach all applications to that network. If you are using ECserver to run your Docker swarm, make.

Nginx service will not start. If Swarm isn’t running, simply type docker swarm init at a shell prompt to set it up. So basically it’s all about deploying multiple containers on multiple nodes. Then we will show load balancing and scaling the number of instances. Creating a Web API app for the role.

The services will both be web services, hosting simple content that can be viewed in a web browser. The Docker Engine instances that participate in the swarm are called nodes, so the workload is divided among the nodes in the swarm.
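How the workload gets divided depends on the service mode. Swarm's global mode (`docker service create --mode global`) runs exactly one task on every node, which is what "run an nginx web server service on every swarm node" amounts to. Replicated mode runs a requested number of tasks spread across the nodes. The Python sketch below is a deliberately simplified placement model. The function name and task labels are hypothetical. The real scheduler also weighs constraints, resources and node availability, which this ignores.

```python
def place_tasks(nodes: list[str], mode: str, replicas: int = 1) -> dict[str, list[str]]:
    """Simplified placement: 'global' runs one task per node,
    'replicated' spreads `replicas` tasks across nodes round-robin."""
    placement: dict[str, list[str]] = {node: [] for node in nodes}
    if mode == "global":
        # One task per node, regardless of `replicas`.
        for i, node in enumerate(nodes, start=1):
            placement[node].append(f"task.{i}")
    elif mode == "replicated":
        if not nodes:
            raise ValueError("no nodes to place tasks on")
        # Spread tasks evenly by cycling through the nodes.
        for i in range(replicas):
            placement[nodes[i % len(nodes)]].append(f"task.{i + 1}")
    else:
        raise ValueError(f"unknown mode {mode!r}")
    return placement


nodes = ["node1", "node2", "node3"]
print(place_tasks(nodes, "global"))
# {'node1': ['task.1'], 'node2': ['task.2'], 'node3': ['task.3']}
print(place_tasks(nodes, "replicated", replicas=5))
# {'node1': ['task.1', 'task.4'], 'node2': ['task.2', 'task.5'], 'node3': ['task.3']}
```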

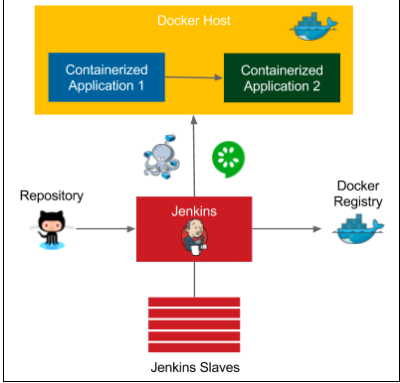

In this article, we are going to deploy and scale an application. Docker can also be used with NGINX Plus. A third node (the NGINX Host in the figure) hosts a containerized NGINX load balancer, which is configured to route traffic across the container endpoints for the two container services. The older, stand-alone variant of Swarm requires a slightly more complex set-up with its own key-value store. The current node becomes the manager node for the newly created swarm.
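The load balancer on the NGINX Host essentially needs one upstream group per container service. Here is a minimal Python sketch that renders such an NGINX configuration. Several choices are hypothetical and not taken from the original setup: the service names `apache_app` and `nginx_app`, the endpoint addresses (from the `192.0.2.0/24` documentation range), and routing each service under its own `/name/` path.

```python
def render_nginx_conf(services: dict[str, list[str]], listen_port: int = 80) -> str:
    """Render an nginx config with one upstream per service and one
    location per service path (hypothetical layout)."""
    lines = ["events {}", "", "http {"]
    # One upstream block per service, listing its container endpoints.
    for name, endpoints in services.items():
        lines.append(f"    upstream {name} {{")
        for ep in endpoints:
            lines.append(f"        server {ep};")
        lines.append("    }")
    lines.append("")
    lines.append("    server {")
    lines.append(f"        listen {listen_port};")
    # /name/ is proxied to the matching upstream; the trailing slash on
    # proxy_pass strips the /name/ prefix before forwarding.
    for name in services:
        lines.append(f"        location /{name}/ {{")
        lines.append(f"            proxy_pass http://{name}/;")
        lines.append("        }")
    lines.append("    }")
    lines.append("}")
    return "\n".join(lines) + "\n"


conf = render_nginx_conf({
    "apache_app": ["192.0.2.21:8080", "192.0.2.22:8080"],
    "nginx_app": ["192.0.2.21:8081", "192.0.2.22:8081"],
})
print(conf)
```

A static endpoint list like this has to be re-rendered and reloaded whenever replicas move. That limitation is what the DNS-based reconfiguration in NGINX Plus addresses.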

Manager nodes and worker nodes

A swarm is composed of two types of container hosts: manager nodes and worker nodes.
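Docker's documentation describes manager nodes as keeping the swarm state consistent through the Raft consensus algorithm. Raft needs a majority (quorum) of managers to be available, so a swarm with N managers tolerates the loss of at most (N - 1) // 2 of them. The small Python sketch below only does that arithmetic. The function names are illustrative and not part of any Docker API.

```python
def raft_quorum(managers: int) -> int:
    """Number of managers that must be available to form a majority."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def tolerated_manager_failures(managers: int) -> int:
    """Managers that can be lost while a majority remains; equals (N - 1) // 2."""
    return managers - raft_quorum(managers)


for n in (1, 3, 4, 5, 7):
    print(n, raft_quorum(n), tolerated_manager_failures(n))
# 1 1 0
# 3 2 1
# 4 3 1
# 5 3 2
# 7 4 3
```

This is why odd manager counts are usually recommended. Going from 3 to 4 managers raises the quorum without tolerating any additional failure.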
