Number of samples per gradient update. If unspecifie batch_size will default to 32. Do not specify the batch_size is your data is in the form of symbolic tensors, generators, or keras.

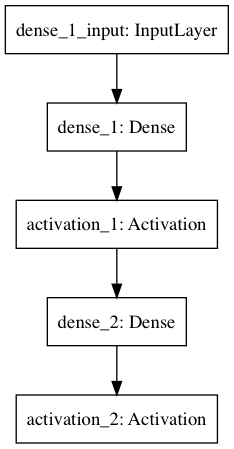

Sequence instances (since they generate batches ). If all outputs in the model are name you can also pass a list mapping output names to data. Xtrain, Ytrain, batch_size = 3 epochs = 100) Here we are first feeding the training data(Xtrain) and training labels(Ytrain). This will be needed in later sections. The network has one input, a hidden layer with units, and an output layer with unit.
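To make "number of samples per gradient update" concrete, here is a minimal NumPy sketch of mini-batch gradient descent on a toy linear-regression problem, run with batch_size=3 and epochs=100, the values used in the training call above. It is not Keras's implementation: the helper `train_minibatch_sgd`, the learning rate and the synthetic data are illustrative assumptions.

```python
import numpy as np

def train_minibatch_sgd(x, y, batch_size=32, epochs=1, lr=0.1, seed=0):
    """Fit y ~ w * x + b with mini-batch SGD on the mean squared error.

    Hypothetical helper for illustration; returns (w, b, number_of_updates).
    """
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    updates = 0
    n = len(x)
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the samples every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]  # the last batch may be smaller
            xb, yb = x[idx], y[idx]
            error = (w * xb + b) - yb
            # Gradient of the batch-mean squared error with respect to w and b
            grad_w = 2.0 * np.mean(error * xb)
            grad_b = 2.0 * np.mean(error)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1  # exactly one parameter update per batch
    return w, b, updates

rng = np.random.default_rng(42)
Xtrain = rng.normal(size=30)
Ytrain = 2.0 * Xtrain + 1.0  # noise-free targets: true w = 2, b = 1

w, b, updates = train_minibatch_sgd(Xtrain, Ytrain, batch_size=3, epochs=100)
print(round(w, 3), round(b, 3), updates)
```

With 30 samples and batch_size=3, each epoch performs 30 / 3 = 10 updates, so 100 epochs perform 1000 updates; a larger batch_size would mean fewer, less noisy updates per epoch.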

This computation can also be done in batches, defined by the batch_size. In Keras, both model. My understanding is that batch size in model. Does it need to be equal to the one used by model?
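Whether the prediction batch size has to match the training one can be checked with a small NumPy sketch. For a stateless feed-forward network, each sample's output depends only on that sample, so splitting the inputs into batches of any size changes memory use and speed but not the predictions. The two-layer network, its random weights and the helper `predict_in_batches` are illustrative assumptions; stateful recurrent models, which carry state from one batch to the next, are the usual exception.

```python
import numpy as np

def forward(params, x):
    """Dense(tanh) -> Dense(linear): each output row depends only on its input row."""
    w1, b1, w2, b2 = params
    hidden = np.tanh(x @ w1 + b1)
    return hidden @ w2 + b2

def predict_in_batches(params, x, batch_size=32):
    """Run the forward pass on consecutive slices of x and stack the results."""
    outputs = [forward(params, x[start:start + batch_size])
               for start in range(0, len(x), batch_size)]
    return np.concatenate(outputs, axis=0)

rng = np.random.default_rng(0)
params = (rng.normal(size=(4, 8)), np.zeros(8),
          rng.normal(size=(8, 1)), np.zeros(1))
x = rng.normal(size=(100, 4))

full = forward(params, x)  # all 100 samples in a single batch
for bs in (1, 3, 32):
    print(bs, np.allclose(predict_in_batches(params, x, bs), full))
```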

How big should batch size and number of. For the batch_size in model. Keras LSTM Batch Size and model. According to the documentation, the parameter steps_per_epoch of the method fit has a default and thus should be optional: the default None is equal to the number of samples in your dataset divided by the batch size, or 1 if that cannot be determined. What does batch_size mean?
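The documented default for steps_per_epoch can be written out as a small helper. This is a sketch, not Keras source code: the name `default_steps_per_epoch` is hypothetical, and rounding up (so that a final, smaller batch still counts as one step) is an assumption about how a partial last batch is handled. The fallback of 1 for an unknown dataset size follows the Keras `fit` documentation.

```python
import math
from typing import Optional

def default_steps_per_epoch(num_samples: Optional[int], batch_size: int = 32) -> int:
    """Steps (batches) per epoch when steps_per_epoch is left as None.

    Hypothetical helper: samples / batch_size, rounded up so a partial final
    batch is still one step; falls back to 1 when the size is unknown.
    """
    if num_samples is None:  # e.g. a data source whose length cannot be determined
        return 1
    return math.ceil(num_samples / batch_size)

print(default_steps_per_epoch(1000))      # 32 (31 full batches + 1 partial batch)
print(default_steps_per_epoch(96, 32))    # 3
print(default_steps_per_epoch(None))      # 1
```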

The entire training set can fit into the Random Access Memory (RAM) of the computer. D tensor with shape: (batch_size, , input_dim). As per the documentation, my training data and labels have shape (2 2 1), representing samples with time steps and one feature. To my understanding, the batch size in the input tensor is the number of examples you give for training or predicting. Do I need to specify the batch size, or will all samples be sent as one batch by default?
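On the last question: Keras's model.fit uses batch_size=32 when it is left unspecified, so all samples end up in a single batch only when there are no more than 32 of them. The NumPy sketch below lists the batch shapes that result from slicing a 3D array of shape (samples, time_steps, features) along its first axis; the helper `batch_shapes` and the example sizes are assumptions for illustration.

```python
import numpy as np

def batch_shapes(x, batch_size=32):
    """Shapes of the consecutive batches obtained by slicing x along axis 0."""
    return [x[start:start + batch_size].shape for start in range(0, len(x), batch_size)]

small = np.zeros((2, 2, 1))    # 2 samples, 2 time steps, 1 feature
large = np.zeros((100, 2, 1))  # 100 samples with the same per-sample shape

print(batch_shapes(small))     # [(2, 2, 1)]: fewer samples than 32, so one batch
print(batch_shapes(large))     # three (32, 2, 1) batches and one (4, 2, 1) batch
```

Note that only the first dimension changes from batch to batch; the per-sample shape (time_steps, features) stays fixed, which is why it, and not the batch size, is what a layer's input shape describes.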

X, trainY, batch_size=3, epochs=50) Here you can see that we are supplying our training data (trainX) and training labels (trainY). Sequential model consisting of custom layers subclassing tf. When writing custom loops from scratch using eager execution and the GradientTape object. Supports all values that can be represented as a string, including 1D iterables such as np. TensorFlow features (eager execution, distribution support and others). It can theoretically feed batches of pairs indefinitely (looping over the dataset).
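A data source that "feeds batches of pairs indefinitely" can be sketched as a plain Python generator; the name `looping_batches` is hypothetical and the toy arrays are assumptions. Because such a generator never ends, whatever consumes it needs an explicit number of steps per epoch, and the batch size is fixed by the generator itself rather than passed separately.

```python
import itertools
import numpy as np

def looping_batches(x, y, batch_size):
    """Yield (x_batch, y_batch) pairs forever, restarting at the end of the data."""
    n = len(x)
    while True:  # never raises StopIteration
        for start in range(0, n, batch_size):
            yield x[start:start + batch_size], y[start:start + batch_size]

x = np.arange(7).reshape(7, 1)
y = np.arange(7)

# Take 5 batches: one full pass is 3 batches (3 + 3 + 1 samples), then it wraps around.
for xb, yb in itertools.islice(looping_batches(x, y, batch_size=3), 5):
    print(yb)
```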

If you want to run batch gradient descent, you need to set batch_size to the number of training samples. However, when I apply model.
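The batch gradient descent remark can be checked numerically. For a loss averaged over samples, a single batch holding every training sample yields exactly the full-dataset gradient, so one update per epoch uses that gradient. Smaller equal-sized batches give noisy estimates that, evaluated at the same weights, average back to the full gradient. The linear model, the random data and the helper `mse_grad` are illustrative assumptions.

```python
import numpy as np

def mse_grad(w, x, y):
    """Gradient of mean((x @ w - y) ** 2) with respect to w."""
    return 2.0 * x.T @ (x @ w - y) / len(x)

rng = np.random.default_rng(1)
x = rng.normal(size=(12, 3))
y = rng.normal(size=12)
w = np.zeros(3)  # all gradients below are evaluated at these same weights

full = mse_grad(w, x, y)

# batch_size == number of samples (12): one batch, identical to the full gradient
one_batch = [mse_grad(w, x[s:s + 12], y[s:s + 12]) for s in range(0, 12, 12)]
print(len(one_batch), np.allclose(one_batch[0], full))

# batch_size = 4: three different estimates whose mean equals the full gradient
minibatch = [mse_grad(w, x[s:s + 4], y[s:s + 4]) for s in range(0, 12, 4)]
print(len(minibatch), np.allclose(np.mean(minibatch, axis=0), full))
```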

I realize that my network does not use batch_size. How to use a stateful LSTM model: stateful vs. stateless LSTM performance comparison.
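In a stateful Keras LSTM, the last state of the sample at index i in one batch is used as the initial state of the sample at index i in the next batch. The batch size therefore has to stay fixed, and consecutive batches should continue the same sequences row by row (with shuffling turned off during fit). The NumPy sketch below shows that data layout only; the helper `stateful_batches`, dropping leftover points and the toy series are assumptions, and the model itself is not shown.

```python
import numpy as np

def stateful_batches(series, batch_size, time_steps):
    """Arrange a 1D series as (num_batches, batch_size, time_steps) for a stateful RNN.

    Row i of batch k+1 continues row i of batch k. Points that do not fill a
    complete batch are dropped (an assumption of this sketch).
    """
    chunk = batch_size * time_steps
    usable = (len(series) // chunk) * chunk
    # Split the series into batch_size contiguous streams, one per batch row
    streams = np.asarray(series[:usable]).reshape(batch_size, -1)
    num_batches = streams.shape[1] // time_steps
    # (batch_size, num_batches, time_steps) -> (num_batches, batch_size, time_steps)
    return streams.reshape(batch_size, num_batches, time_steps).transpose(1, 0, 2)

batches = stateful_batches(np.arange(25), batch_size=2, time_steps=3)
print(batches.shape)   # (4, 2, 3); the 25th point is dropped
print(batches[0])      # rows start the two streams: [0 1 2] and [12 13 14]
print(batches[1])      # and continue them: [3 4 5] and [15 16 17]
```

Adding a trailing feature axis (for example `batches[..., None]`) gives each batch the (batch_size, time_steps, features) shape a recurrent layer expects.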
