Engines in the MergeTree family are the most universal and functional table engines for high-load tasks. The property these engines share is fast data insertion followed by background data processing. The File table engine keeps data in a file in one of the supported file formats (TabSeparated, Native, etc.).
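The "insert fast, process later" property can be pictured with a toy model. This is a hypothetical Python sketch, not ClickHouse code: `ToyMergeTree`, `insert`, and `merge_parts` are invented names. Each insert only sorts its own batch and stores it as a new immutable part. A separate merge step, which ClickHouse runs in the background, later combines the sorted parts into one.

```python
import heapq
from typing import List, Tuple

Row = Tuple[int, str]  # (primary_key, payload): an illustrative row shape


class ToyMergeTree:
    """Toy model: each INSERT becomes an immutable sorted part; merging happens later."""

    def __init__(self) -> None:
        self.parts: List[List[Row]] = []

    def insert(self, rows: List[Row]) -> None:
        # Fast path: sort only the incoming batch and store it as a new part.
        self.parts.append(sorted(rows))

    def merge_parts(self) -> None:
        # "Background" step: k-way merge of already-sorted parts into one sorted part.
        if len(self.parts) > 1:
            self.parts = [list(heapq.merge(*self.parts))]


tree = ToyMergeTree()
tree.insert([(3, "c"), (1, "a")])
tree.insert([(2, "b"), (5, "e")])
print(len(tree.parts))  # 2
tree.merge_parts()
print(tree.parts)  # [[(1, 'a'), (2, 'b'), (3, 'c'), (5, 'e')]]
```

Because every part is already sorted, the merge is a cheap linear k-way merge rather than a full re-sort. That is why deferring it does not slow inserts down.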

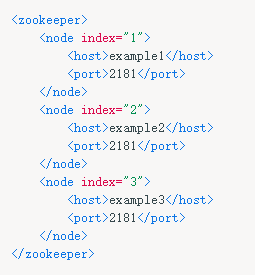

One typical use of the File engine is converting data from one format to another.

ClickHouse uses Apache ZooKeeper to store replica metadata. To use replication, set the parameters in the `zookeeper` section of the server configuration. Use ZooKeeper version 3.

The MySQL engine parameters include the MySQL server address and the remote database name.
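The File engine's format-conversion use case can be illustrated outside ClickHouse with a small stand-in that reads tab-separated rows and writes comma-separated ones. This is a hypothetical Python helper (`tsv_to_csv` is an invented name). It splits only on tabs and does not decode ClickHouse's TabSeparated backslash escapes such as `\t` or `\n` inside values.

```python
import csv
import io


def tsv_to_csv(tsv_text: str) -> str:
    """Re-encode tab-separated rows as comma-separated rows (no TSV escape decoding)."""
    # QUOTE_NONE: treat quote characters in the TSV input as ordinary data.
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
    out = io.StringIO()
    # Default QUOTE_MINIMAL: fields containing commas or quotes get double-quoted.
    writer = csv.writer(out, lineterminator="\n")
    for row in reader:
        writer.writerow(row)
    return out.getvalue()


print(tsv_to_csv("1\thello\n2\tworld, again\n"), end="")
# 1,hello
# 2,"world, again"
```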

`replace_query` is a flag that converts `INSERT INTO` queries to `REPLACE INTO`.

The Set engine is a data set that is always in RAM. It is intended for use on the right side of the IN operator (see the section on IN operators). You can use INSERT to insert data into the table.
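In SQL, a Set table is used as `SELECT ... WHERE user_id IN some_set_table`, where `some_set_table` is a hypothetical table created with the Set engine. The Python below is only an analogy for those semantics: the set lives entirely in memory, and each row is tested for membership. `in_filter`, `user_set`, and the `user_id` column are invented for illustration.

```python
from typing import Iterable, List, Set


def in_filter(rows: Iterable[dict], column: str, allowed: Set[object]) -> List[dict]:
    # Keep rows whose column value is in the in-memory set, like "WHERE column IN set".
    return [row for row in rows if row[column] in allowed]


# Hypothetical "Set table" contents, held in RAM.
user_set = {1, 3}
# Inserting into the set: duplicates are simply absorbed.
user_set.update([3, 3])

rows = [{"user_id": 1}, {"user_id": 2}, {"user_id": 3}]
print(in_filter(rows, "user_id", user_set))  # [{'user_id': 1}, {'user_id': 3}]
```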

The HDFS engine provides integration with the Apache Hadoop ecosystem by allowing you to manage data on HDFS via ClickHouse. It is similar to the File and URL engines, but provides Hadoop-specific features. The documentation provides more in-depth information.

In ClickHouse you have to add an explicit ORDER BY clause to a SELECT query to get rows back sorted by the composite primary key.

Database engines allow you to work with tables.

We are not losing Kafka messages. After the ClickHouse server restarts, the last messages are duplicated, not lost: when the server comes back up, it consumes the same messages a second time.
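A simplified model of at-least-once consumption explains the duplicates. Suppose messages are written to the table but the consumer offset is not committed before the server stops. On the next run, the consumer starts from the old offset and reads the same messages again. The names below (`consume`, `crash_before_commit`) are hypothetical. This is an assumption about the general mechanism, not a description of the Kafka engine's internals.

```python
from typing import List, Tuple


def consume(log: List[str], committed: int, crash_before_commit: bool) -> Tuple[List[str], int]:
    """Read from the committed offset, insert the batch, then commit the new offset."""
    inserted = log[committed:]       # messages written to the table
    if crash_before_commit:
        return inserted, committed   # insert succeeded, but the offset was not advanced
    return inserted, len(log)


log = ["m1", "m2", "m3"]
table: List[str] = []

batch, offset = consume(log, committed=0, crash_before_commit=True)
table.extend(batch)   # first run: rows inserted, then the server stopped before committing
batch, offset = consume(log, committed=offset, crash_before_commit=False)
table.extend(batch)   # after restart: the same messages are read and inserted again

print(table)   # ['m1', 'm2', 'm3', 'm1', 'm2', 'm3']
print(offset)  # 3
# Deduplicating on a unique message value/id tolerates the replay.
print(list(dict.fromkeys(table)))  # ['m1', 'm2', 'm3']
```

Committing after the insert is what makes the failure mode "duplicated, not lost". Committing before the insert would reverse it and risk losing messages instead.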

External data sent along with a query is put in a temporary table (see the section on temporary tables) and can be used in the query (for example, in IN operators).

On the latest ClickHouse server version 19.

The Lazy database engine works like Ordinary, but keeps tables in RAM only for expiration_time_in_seconds seconds after the last access.
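The Lazy engine's expiration rule behaves like a time-to-live cache keyed by table name. In SQL the engine is declared as `ENGINE = Lazy(expiration_time_in_seconds)` and works only with *Log tables. The sketch below is a hypothetical Python model, not ClickHouse code. `LazyTableCache`, `loader`, and the injectable `clock` are invented for illustration, and `ttl_seconds` stands in for `expiration_time_in_seconds`.

```python
import time
from typing import Callable, Dict, List, Tuple


class LazyTableCache:
    """Keep a loaded table in memory only until it has gone unused for ttl_seconds."""

    def __init__(self, ttl_seconds: float, loader: Callable[[str], object],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.loader = loader
        self.clock = clock
        self._cache: Dict[str, Tuple[object, float]] = {}  # name -> (table, last access)

    def get(self, name: str) -> object:
        self.evict_expired()
        if name in self._cache:
            table, _ = self._cache[name]
        else:
            table = self.loader(name)  # e.g. read the table from disk
        self._cache[name] = (table, self.clock())  # refresh the last-access time
        return table

    def evict_expired(self) -> None:
        now = self.clock()
        expired = [n for n, (_, last) in self._cache.items() if now - last > self.ttl]
        for n in expired:
            del self._cache[n]

    def loaded(self) -> List[str]:
        return sorted(self._cache)


now = [0.0]          # fake clock so the example is deterministic
loads: List[str] = []


def load(name: str) -> str:
    loads.append(name)
    return f"data:{name}"


cache = LazyTableCache(10, loader=load, clock=lambda: now[0])
cache.get("log_a")   # miss: loaded from "disk"
now[0] = 5.0
cache.get("log_a")   # hit: last access becomes 5.0
now[0] = 20.0
cache.evict_expired()
print(cache.loaded())  # []  (idle for 15 s > 10 s)
print(loads)           # ['log_a']
```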

The MergeTree engine supports an index by primary key and by date, and makes it possible to update data in real time. It is the most advanced table engine in ClickHouse. Don’t confuse it with the Merge engine.
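To make the primary-key index more concrete, here is a simplified Python model of a sparse index. Rows are kept sorted by key, and one index entry records the first key of each granule of rows. In ClickHouse the granule size is the `index_granularity` setting, 8192 rows by default. A lookup then uses binary search to find the only granules worth scanning. The function names are hypothetical. Real MergeTree parts store marks per column and handle composite keys, and this sketch ignores both.

```python
import bisect
from typing import List, Tuple


def build_sparse_index(sorted_keys: List[int], granularity: int) -> List[int]:
    """Record the first key of every granule (every `granularity` rows)."""
    return [sorted_keys[i] for i in range(0, len(sorted_keys), granularity)]


def granules_for_key(index: List[int], key: int) -> Tuple[int, int]:
    """Return the [first, last] granule numbers that may contain rows with `key`."""
    # The last granule starting strictly below `key` may hold its first occurrences.
    first = max(bisect.bisect_left(index, key) - 1, 0)
    # No granule starting above `key` can contain it.
    last = max(bisect.bisect_right(index, key) - 1, 0)
    return first, last


keys = list(range(1, 13))                  # 12 rows, already sorted by primary key
index = build_sparse_index(keys, granularity=4)
print(index)                               # [1, 5, 9]
print(granules_for_key(index, 6))          # (1, 1): only rows 4..7 need scanning
```

The index stays tiny (one entry per granule, not per row), so it fits in memory. The trade-off is that a lookup may scan a whole granule, and occasionally a neighbouring one when a key sits on a granule boundary.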

I have created a table on my local system (`CREATE TABLE default.… Int8) ENGINE = Distributed(logs, default, test_remote)`) and a table on the remote system with IP 10.…

The odbc() table function is a good way to get the wheels spinning and try some things out, but at some point you may realize that you want to create more complicated queries against external data using ODBC in ClickHouse.

The Python imports involved are `from sqlalchemy import Column, MetaData, literal` and `from clickhouse_sqlalchemy import Table`.

Important notice for our beloved Apache Kafka users.

We continue to improve Kafka engine reliability, performance and usability, and as a part of this entertaining process we have released 19. This release supersedes the previous stable release 19.
